Editorial Disclaimer

The views expressed in articles published on FIRES do not necessarily reflect those of the IES or represent endorsement by the IES.

By Ian Ashdown, P. Eng. (Ret.), FIES, Senior Scientist, SunTracker Technologies Ltd.

There is a common-sense argument being presented in the popular media that since humans evolved under sunlight, our bodies must surely make use of all the solar energy available to us. Given that more than 50 percent of this energy is due to near-infrared radiation, we are clearly risking our health and well-being by using LED lighting that emits no near-infrared radiation whatsoever.

Fact or fiction?

To examine this issue, we begin with a few definitions. There are several schemes used to partition the infrared spectrum. ISO 20473, for example, defines near-infrared radiation as electromagnetic radiation with wavelengths ranging from 780 nm to 3.0 μm (ISO 2007). The CIE, meanwhile, divides this range into IR-A (780 nm to 1.4 μm) and IR-B (1.4 μm to 3.0 μm), while noting that the borders of near-infrared “necessarily vary with the application (e.g., including meteorology, photochemistry, optical design, thermal physics, etc.)” (CIE 2016).
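The two partitioning schemes can be written down as a small lookup. This is an illustrative sketch only: the function names are hypothetical. It also treats each band as a half-open interval, so exactly 1.4 μm falls in IR-B and exactly 3.0 μm falls outside both. That boundary convention is an assumption of the sketch, not something quoted from either standard.

```python
from typing import Optional

# Band edges in nanometres, from the definitions quoted above.
# ISO 20473 treats 780 nm to 3.0 um as one near-infrared band;
# the CIE splits the same range into IR-A and IR-B.
ISO_20473_NIR_NM = (780.0, 3000.0)
CIE_IR_BANDS_NM = {
    "IR-A": (780.0, 1400.0),
    "IR-B": (1400.0, 3000.0),
}


def is_iso_near_infrared(wavelength_nm: float) -> bool:
    """True if the wavelength lies in the ISO 20473 near-infrared band.

    The half-open interval [780, 3000) is an assumption of this sketch.
    """
    lo, hi = ISO_20473_NIR_NM
    return lo <= wavelength_nm < hi


def cie_ir_band(wavelength_nm: float) -> Optional[str]:
    """Return "IR-A" or "IR-B", or None outside both (hypothetical helper)."""
    for name, (lo, hi) in CIE_IR_BANDS_NM.items():
        if lo <= wavelength_nm < hi:
            return name
    return None


if __name__ == "__main__":
    for wl in (670.0, 850.0, 1064.0, 1550.0, 2500.0):
        print(f"{wl:6.0f} nm  ISO NIR: {is_iso_near_infrared(wl)}  CIE: {cie_ir_band(wl)}")
```

Under this convention, 670 nm red light lies outside both schemes, 1064 nm is IR-A, and 1550 nm is IR-B.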

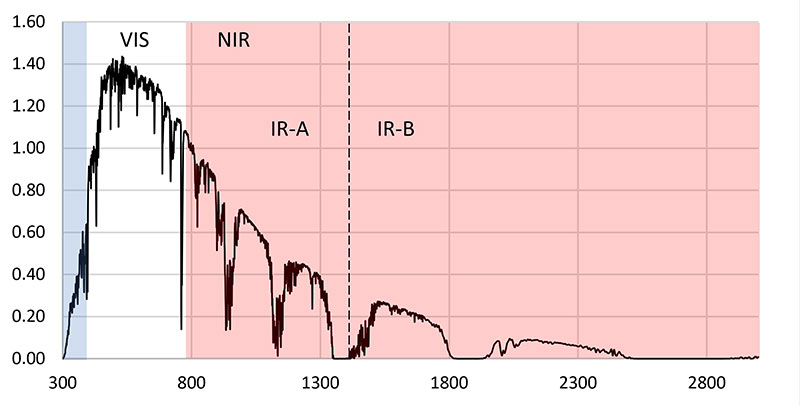

The terrestrial solar spectrum that we are exposed to on a clear day is shown in Figure 1. This varies somewhat depending on the solar elevation, which is in turn dependent on the latitude, time of day, and date. However, Figure 1 is sufficient for discussion purposes.

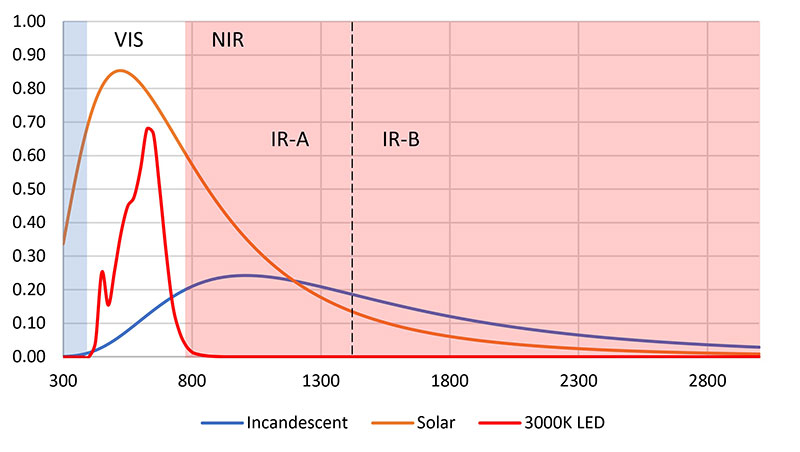

Compared to sunlight, modern-day electric lighting, and in particular LED lighting, is sorely deficient in near-infrared radiation. Figure 2 illustrates the problem, where the terrestrial solar spectrum has been approximated by a blackbody radiator with a color temperature of 5500 K. Look at the spectrum of incandescent lights – they clearly provide the near-infrared radiation that we need. By comparison, 3000-K LEDs (and indeed, any white light LEDs) provide no near-infrared radiation whatsoever.

The same is true, of course, for fluorescent lamps. Given how much time most people spend indoors, we have been depriving ourselves of near-infrared radiation since the introduction of fluorescent lamps in the 1950s!

This is only common sense, but it was also common sense that led Werner von Siemens to proclaim, “Electric light will never take the place of gas!” Common sense notwithstanding, the above two paragraphs are patent nonsense.
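The relative spectral shapes in Figure 2 are not where the argument fails. As a rough check, we can integrate Planck's law over the ISO 20473 near-infrared band to find what fraction of a blackbody's output falls there. The sketch below follows Figure 2 in approximating sunlight by a 5500 K blackbody. It also assumes that an incandescent lamp can be approximated by a 2856 K blackbody, the temperature associated with CIE standard illuminant A. Both are simplifications: terrestrial sunlight has atmospheric absorption bands, and real lamps depart from ideal blackbodies. The cooler source should come out with a markedly larger share of its output in the near-infrared. That result describes spectral shape only, not how much radiation actually reaches us.

```python
import math

# SI constants (CODATA values)
H = 6.62607015e-34       # Planck constant, J s
C = 2.99792458e8         # speed of light in vacuum, m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4


def spectral_exitance(wavelength_m: float, temp_k: float) -> float:
    """Planck's law: blackbody spectral exitance, W m^-2 per metre of wavelength."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * math.pi * H * C**2 / (wavelength_m**5 * math.expm1(x))


def band_fraction(lo_m: float, hi_m: float, temp_k: float, n: int = 2000) -> float:
    """Fraction of total exitance (sigma * T^4) between lo_m and hi_m, via Simpson's rule."""
    if n % 2:
        n += 1  # Simpson's rule needs an even number of intervals
    step = (hi_m - lo_m) / n
    total = spectral_exitance(lo_m, temp_k) + spectral_exitance(hi_m, temp_k)
    for i in range(1, n):
        weight = 4.0 if i % 2 else 2.0
        total += weight * spectral_exitance(lo_m + i * step, temp_k)
    return (total * step / 3.0) / (SIGMA * temp_k**4)


NIR_LO_M, NIR_HI_M = 780e-9, 3.0e-6  # ISO 20473 near-infrared band

if __name__ == "__main__":
    for label, temp_k in (("5500 K (daylight approximation)", 5500.0),
                          ("2856 K (incandescent approximation)", 2856.0)):
        frac = band_fraction(NIR_LO_M, NIR_HI_M, temp_k)
        print(f"{label}: {frac:.0%} of blackbody output in 780 nm to 3.0 um")
```

Simpson's rule with a step of about 1 nm is far finer than this smooth curve requires. The `n` parameter is exposed so the integration can be checked for convergence.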

A Sense of Scale

Figure 2 is deliberately misleading, even though it was recently published without comment in a trade journal elsewhere. The problem is one of scale. If we go back to the early 1950s, when homes and offices were lit predominantly by incandescent lamps, illuminance levels were on the order of 50 to 200 lux. Outdoor illuminance levels, by contrast, are on the order of 1000 lux on overcast days and 10,000 to 100,000 lux on clear days.

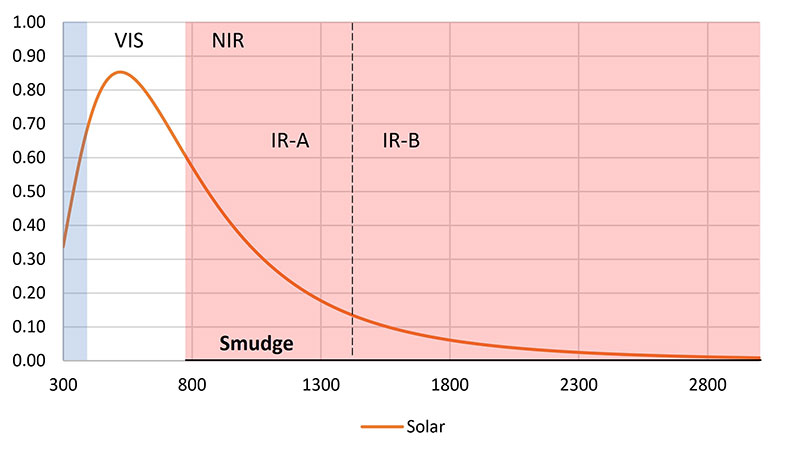

Taking illuminance as a rough proxy for near-infrared irradiance, and assuming interiors lit to 100 to 200 lux, we would have received roughly five to ten times as much near-infrared radiation outdoors as indoors even on an overcast day. Under a clear midday sun (about 100,000 lux), the factor would have been five hundred to one thousand. Given this, properly scaled incandescent and 3000-K LED plots in Figure 2 would both be no more than smudges on the abscissa.
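How large the gap is depends on which ends of the quoted illuminance ranges are paired. A minimal sketch follows, with a hypothetical helper name. It compares illuminance only, which simplifies things, because different sources emit different amounts of near-infrared per lux. The code pairs the full 50-to-200-lux interior range with the outdoor ranges. The narrower factors quoted above correspond to interiors at 100 to 200 lux and full sun at 100,000 lux.

```python
# Illuminance ranges (low, high) in lux, as quoted in the text.
INDOOR_1950S_LUX = (50.0, 200.0)
OVERCAST_LUX = (1000.0, 1000.0)
CLEAR_DAY_LUX = (10_000.0, 100_000.0)


def ratio_range(outdoor_lux, indoor_lux):
    """Smallest and largest outdoor/indoor ratios for two (low, high) ranges."""
    out_lo, out_hi = outdoor_lux
    in_lo, in_hi = indoor_lux
    # Smallest ratio: dimmest outdoors over brightest indoors; largest is the reverse.
    return out_lo / in_hi, out_hi / in_lo


if __name__ == "__main__":
    for label, outdoor in (("overcast", OVERCAST_LUX), ("clear day", CLEAR_DAY_LUX)):
        lo, hi = ratio_range(outdoor, INDOOR_1950S_LUX)
        print(f"{label}: {lo:,.0f}x to {hi:,.0f}x brighter outdoors")
```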

Common sense should also tell us that we have survived quite nicely with very little near-infrared radiation in our daily lives ever since we began spending our days in offices and factories rather than working in the fields. It does not matter whether the electric light sources are incandescent, fluorescent, or LED; the amount of near-infrared radiation they produce is inconsequential compared with solar radiation.

This does not mean, however, that near-infrared radiation has no effect on our bodies. There are hundreds, if not thousands, of medical studies that indicate otherwise. For lighting professionals, it is therefore important to understand these effects and how they relate to lighting design.

Low Level Light Therapy

Many of the medical studies involving near-infrared radiation concern low level light therapy (LLLT), also known as “low level laser therapy,” “cold laser therapy,” “laser biostimulation,” and most generally, “photobiomodulation.” Using devices with lasers or LEDs that emit visible light or near-infrared radiation, these therapies promise to reduce pain, inflammation, and edema; promote healing of wounds, deeper tissues, and nerves; and prevent tissue damage.

Laser therapy is often referred to as a form of “alternative medicine,” largely because its beneficial effects are difficult to quantify in medical studies. Unfortunately, the popular literature, including magazine articles, personal blogs, product testimonials, and self-help medical websites, often cites these studies as evidence that near-infrared radiation is essential to our health and well-being. In doing so, it overlooks two key points: irradiance and dosage.

Irradiance

The adjective “low level” is somewhat of a misnomer. It distinguishes LLLT medical devices from the high-power medical lasers used for tissue ablation, cutting, and cauterization; it does not mean the irradiance is low in absolute terms. The radiation level (that is, irradiance) is less than that needed to heat the tissue, which is about 100 mW/cm². By comparison, the average solar IR-A irradiance is around 20 mW/cm² during the day, with a peak irradiance reaching 40 mW/cm² (Piazena and Kelleher 2010).

This is not a fair comparison, however. In designing studies to test LLLT hypotheses, there are many parameters that must be considered, including whether to use coherent (laser) or incoherent (LED) radiation, the laser wavelength (or peak wavelength for LEDs), whether to use continuous or pulsed radiation, and the irradiance, target area, and pulse shape. Tsai and Hamblin (2017) correctly noted that if any of these parameters are changed, it may not be possible to compare otherwise similar studies.

Solar near-infrared radiation has its own complexities. In Figure 1, the valleys in the spectral distribution are mostly due to atmospheric absorption by water and carbon dioxide. Further, the spectral distribution itself varies over the course of the day, with relatively more of the visible light being absorbed near sunrise and sunset. Simply saying “solar near-infrared” is not enough when comparing daylight exposure to LLLT study results.

Complicating matters even further, near-infrared radiation can penetrate from a few millimeters to a centimeter or so through the epidermis and into the dermis, where it is both absorbed and scattered. Shorter (visible) wavelengths are strongly absorbed by hemoglobin and melanin, while wavelengths longer than about 1150 nm are strongly absorbed by water. This leaves an “optical window” between approximately 600 nm and 1200 nm, which is where low level light therapy devices operate.

The biochemical details of how near-infrared radiation interacts with the human body are fascinating, with the primary chromophores hemoglobin and melanin absorbing the photons and then undergoing radiationless de-excitation, fluorescence, and other photochemical processes. For our purposes, however, these details are well beyond the focus of this article.

What matters here is that, whatever positive results a given medical study may report, the differences described above make those results difficult to compare with exposure to solar near-infrared radiation. Further, the irradiance levels used are often considerably higher than what we would experience outdoors on a clear day, and much higher than we would experience indoors, even with incandescent light sources. Given this, it is generally inappropriate to treat LLLT studies as evidence that near-infrared radiation is essential to our health and well-being.

Dosage

The Bunsen-Roscoe law, also known as the “law of reciprocity,” is one of the fundamental laws of photobiology and photochemistry. It states that the biological effect of electromagnetic radiation depends only on the radiant exposure (the radiant energy received per unit area, in J/cm²), and is therefore independent of the duration over which the exposure occurs. That is, one short pulse at high irradiance is equivalent to one or more long pulses at low irradiance, as long as the radiant exposure (irradiance times duration) is the same.
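In symbols, the law says that the effect depends only on the radiant exposure H = E · t, where E is the irradiance and t is the exposure duration. The sketch below uses hypothetical helper names. Its 4 J/cm² target was chosen only because it gives round numbers; it is not a dose from any study cited here. The sketch shows how very different combinations of irradiance and duration deliver the same radiant exposure.

```python
def radiant_exposure_j_per_cm2(irradiance_mw_per_cm2: float, duration_s: float) -> float:
    """Radiant exposure H = E * t, converting mJ/cm^2 to J/cm^2."""
    return irradiance_mw_per_cm2 * duration_s / 1000.0


def duration_for_exposure_s(target_j_per_cm2: float, irradiance_mw_per_cm2: float) -> float:
    """Exposure time needed to reach a target radiant exposure at a given irradiance."""
    return target_j_per_cm2 * 1000.0 / irradiance_mw_per_cm2


if __name__ == "__main__":
    TARGET_J_PER_CM2 = 4.0  # illustrative value only
    # 100 and 20 mW/cm^2 are the heating level and average solar IR-A
    # irradiance quoted earlier; 2 mW/cm^2 is an arbitrary low value.
    for irradiance in (100.0, 20.0, 2.0):
        seconds = duration_for_exposure_s(TARGET_J_PER_CM2, irradiance)
        dose = radiant_exposure_j_per_cm2(irradiance, seconds)
        print(f"{irradiance:5.0f} mW/cm^2 for {seconds:6.0f} s -> {dose} J/cm^2")
```

Under strict reciprocity, all three exposures would produce the same biological effect.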

Human tissue, however, does not obey this law. Instead, it exhibits a “biphasic dose response,” in which larger doses or higher irradiances are often less effective than smaller ones. At higher levels (greater than approximately 100 mW/cm²), the radiant power induces skin hyperthermia (that is, overheating), while at lower levels there is a threshold below which no beneficial effects are observed (Huang et al. 2009). This is presumably due to various repair mechanisms that respond to photo-induced cellular damage.
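The following crude sketch shows why reciprocity fails in this picture. If the outcome depends on which irradiance regime an exposure falls into, then equal radiant exposures can have different effects. Only the hyperthermia level of approximately 100 mW/cm² comes from the text. The 5 mW/cm² lower threshold is a hypothetical placeholder, and the three-way classification is a simplification, not a model of the dose-response curves reported by Huang et al.

```python
HYPERTHERMIA_MW_PER_CM2 = 100.0   # approximate heating level quoted in the text
LOWER_THRESHOLD_MW_PER_CM2 = 5.0  # hypothetical placeholder, not from any study


def irradiance_regime(irradiance_mw_per_cm2: float,
                      lower_threshold_mw_per_cm2: float = LOWER_THRESHOLD_MW_PER_CM2) -> str:
    """Classify an irradiance into one of three coarse regimes (illustrative only)."""
    if irradiance_mw_per_cm2 > HYPERTHERMIA_MW_PER_CM2:
        return "hyperthermia"
    if irradiance_mw_per_cm2 < lower_threshold_mw_per_cm2:
        return "below threshold"
    return "within window"


if __name__ == "__main__":
    DOSE_J_PER_CM2 = 4.0  # same radiant exposure in every case (illustrative)
    for irradiance in (200.0, 20.0, 1.0):
        seconds = DOSE_J_PER_CM2 * 1000.0 / irradiance
        print(f"{irradiance:5.0f} mW/cm^2 for {seconds:6.0f} s "
              f"= {DOSE_J_PER_CM2} J/cm^2 -> {irradiance_regime(irradiance)}")
```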

This is not to say that solar near-infrared radiation cannot have a beneficial effect. As an example, a study of wound healing in mice using 670-nm red LEDs demonstrated significant increases in wound closure rates beginning at an irradiance of 8 mW/cm² (Lanzafame et al. 2007). This is comparable with the average solar IR-A irradiance of 20 mW/cm² on a clear day. However, it is also orders of magnitude greater than the average irradiance that might be expected indoors from incandescent light sources.

As an aside, it should be noted that treatment of dermatological conditions with sunlight, or heliotherapy, was practiced by ancient Egyptian and Indian healers more than 3,500 years ago (Hönigsmann 2013). However, this involved the entire solar spectrum from 300 nm (UV-B) to 2500 nm (IR-B); it is impossible to relate the effects of such treatments to near-infrared radiation alone.

Near-Infrared Radiation Risks

Judging from low level light therapy studies, there appears to be scant evidence, if any, that a lack of near-infrared radiation in indoor environments is deleterious to our health and well-being. If anything, the minimum required irradiances and the biphasic dose response argue against it.

There are, in fact, known risks of near-infrared radiation exposure. Erythema ab igne, for example, is a disorder characterized by patchy discoloration of the skin and other clinical symptoms. It is caused by prolonged exposure to heat sources such as hearth fires, and it is an occupational hazard for glass blowers and bakers exposed to furnaces and hot ovens (e.g., Tsai and Hamblin 2017). It is not a risk to the general population, however, because the irradiances involved are usually many times greater than solar near-infrared irradiance.

More worryingly, IR-A radiation can penetrate deeply into the skin and cause tissue damage, resulting in photoaging of the skin (Schroeder et al. 2008, Robert et al. 2015) and, at worst, possibly skin cancers (e.g., Schroeder et al. 2010, Tanaka 2012). Conventional sunscreen lotions may block the ultraviolet radiation that similarly causes photoaging and skin cancers, but they have little or no effect on near-infrared radiation.

Evolutionary Adaptation

Excess amounts of ultraviolet radiation can cause erythema (sunburn) in the short term, and photoaging and skin cancers in the long term. Curiously, pre-exposure to IR-A radiation preconditions the skin, making it less susceptible to UV-B radiation damage (Menezes et al. 1998). This is probably an evolutionary adaptation: in the morning hours shortly after sunrise, the atmosphere absorbs and scatters much of the ultraviolet and blue light while still transmitting near-infrared radiation (Barolet et al. 2016). Morning exposure to IR-A radiation is likely taken as a cue to prepare the skin for the more intense ultraviolet and near-infrared radiation of midday. Likewise, the decreasing ultraviolet exposure of late afternoon may be taken as a cue to initiate cellular repair of UV-damaged skin. In this sense, then, solar near-infrared radiation has an identified benefit.

Conclusion

So, are we risking our health and well-being by using LED lighting that emits no near-infrared radiation, or is this patent nonsense as stated above? Perhaps surprisingly, the answer is that we do not know.

The above discussion has focused on the effects of near-infrared radiation on the skin and low level light therapy. Given that the irradiances and dosages of LLLT are much greater than those experienced from indoor lighting (including incandescent), it is inappropriate to cite LLLT medical studies in support of near-infrared lighting.

This does not mean, however, that long-term exposure to near-infrared radiation has no benefits, or that its absence carries no risks. The problem is in identifying these possible benefits and risks. Without obvious medical consequences, long-term controlled studies would need to be designed that eliminate a long list of confounding factors, ranging from light and radiation exposure to diet and circadian rhythms. Such studies would also need to be performed with laboratory animals, as human volunteers are unlikely to agree to avoid daylight completely for months or years at a time.

In the meantime, we as lighting professionals must work with the best available knowledge. Lacking any credible evidence that very low levels of near-infrared radiation are necessary for our health and well-being, there appears to be no reason not to continue using LED and fluorescent light sources.

References

Barolet D et al. 2016. Infrared and skin: Friend or foe. J Photochem Photobiol B. 155:78-85.

International Commission on Illumination [CIE]. 2016. International Lighting Vocabulary, 2nd ed. CIE DIS 017/E:2016. Vienna, Austria: CIE Central Bureau.

Hönigsmann H. 2013. History of phototherapy in dermatology. Photochem Photobiol Sci. 12:16-21.

Huang AC-H et al. 2009. Biphasic dose response in low level light therapy. Dose-Response. 7(4):358-383.

International Organization for Standardization [ISO]. 2007. ISO 20473:2007, Optics and Photonics – Spectral Bands. Geneva, Switzerland: ISO.

Lanzafame RJ et al. 2007. Reciprocity of exposure time and irradiance on energy density during photoradiation on wound healing in a murine pressure ulcer model. Lasers Surg Med. 39(6):534-542.

Menezes S et al. 1998. Non-coherent near infrared radiation protects normal human dermal fibroblasts from solar ultraviolet toxicity. J Invest Dermatol. 111(4):629-633.

Piazena H, Kelleher DK. 2010. Effects of infrared-A irradiation on skin: Discrepancies in published data highlight the need for exact consideration of physical and photobiological laws and appropriate experimental settings. Photochem Photobiol. 86(3):687-705.

Robert C et al. 2015. Low to moderate doses of infrared A irradiation impair extracellular matrix homeostasis of the skin and contribute to skin photodamage. Skin Pharmacol Physiol. 28:196-204.

Schroeder P et al. 2008. The role of near infrared radiation in photoaging of the skin. Exp Gerontol. 43(7):629-632.

Schroeder P et al. 2010. Photoprotection beyond ultraviolet radiation – Effective sun protection has to include protection against infrared A radiation-induced skin damage. Skin Pharmacol Physiol. 23:15-17.

Tanaka Y. 2012. Impact of near-infrared radiation in dermatology. World J Dermatol. 1(3):30-37.

Tsai S-R, Hamblin MR. 2017. Biological effects and medical applications of infrared radiation. J Photochem Photobiol B. 170:197-207.